mTLS on My Mind

This Saturday I woke up with mTLS on my mind. I even dreamed about it, although I can’t recall the details. I have been facing a problem recently: a collection of containers running on ECS is blocked from moving to a Kubernetes cluster I operate. Terminating TLS at the ingress controller (ingress-nginx) is not considered adequate for HIPAA transport encryption requirements, because communication between the ingress controller and the pod is not encrypted. I’ll be honest, I’m not a HIPAA expert, so I’m not in a position to argue with this requirement. I have certainly heard the argument that encrypting traffic between the ingress controller and pod is not necessary because the traffic is sequestered to a private virtual network. However, consider a multi-tenant cluster where applications owned by different teams should not see each other’s traffic. I decided to set up mTLS in my own cluster, the one that hosts this website, to see how difficult the task is.

I woke up around 9:30AM. I got out of bed around 10:30AM. I drank all the coffee by 11:30AM. I’ll admit by this time I was teetering back and forth between finding a solution and arguing that it wasn’t needed, while wondering how hard it could really be to set all of this up.

I was drawn to Linkerd as a potential solution to my problem because of its automatic mTLS feature. In short, once you add a simple annotation to a deployment or namespace, Linkerd automatically adds a proxy sidecar to each pod in that deployment or namespace.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  annotations:
    linkerd.io/inject: enabled
```

Can it really be that easy?

That sounds like an easy solution. Almost too easy. I got to work creating a new Terraform module to install the Linkerd Helm chart in my cluster. I also installed the step and linkerd CLI tools. Around noon I had a slice of pizza from the night before that I microwaved for fifty seconds.

I will admit there was a fair amount of iterating to get my module working, but I had Linkerd and Linkerd Viz installed by 1:00PM. I decided to reward myself with a beer for all my hard work, and because I had a headache from the beer I drank the night before. I had a Ninkasi Total Domination IPA.

By 2:00PM I figured out why the magical namespace annotation was not automagically attaching Linkerd sidecar proxies to my pods. The proxy injector only acts when a pod is created, so pods that were already running stayed unmeshed. I needed to restart my Deployments and ReplicaSets in order for the injector to add the proxy sidecar. After restarting my ingress controller, website Deployment, and database StatefulSet, I had enabled mTLS between my ingress controller and my WordPress pod.
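The same annotation can also be placed on a single workload's pod template instead of the whole namespace. Here is a minimal sketch, assuming a hypothetical Deployment named website in a namespace of the same name; the labels and image are placeholders rather than my actual manifest. A side effect worth noting is that editing the pod template triggers a new rollout, so the pods are recreated and the injector gets its chance to add the proxy.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: website            # hypothetical name
  namespace: website       # hypothetical namespace
spec:
  replicas: 1
  selector:
    matchLabels:
      app: website
  template:
    metadata:
      labels:
        app: website
      annotations:
        # Must sit on the pod template, not on the Deployment's own metadata
        linkerd.io/inject: enabled
    spec:
      containers:
        - name: wordpress
          image: wordpress:latest   # placeholder image
          ports:
            - containerPort: 80
```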

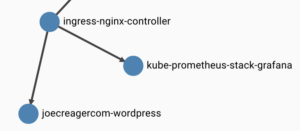

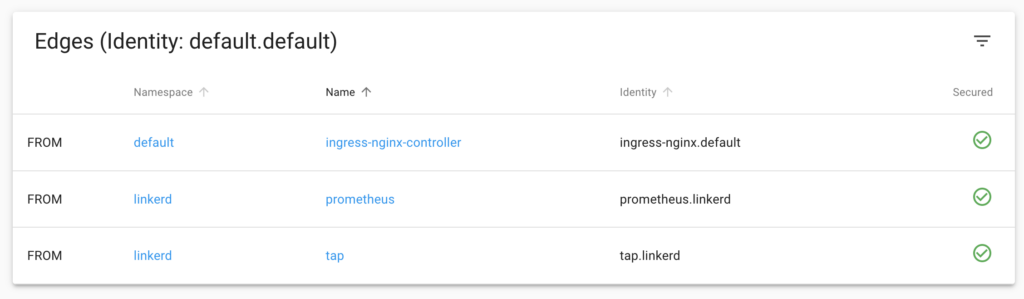

I ran linkerd viz dashboard to see what I had. The first thing I noticed, and really appreciated, was a network diagram showing the mesh between my ingress controller and website. The dashboard also made it evident whether or not a pod was part of the mesh. Figure 2 shows the connections made to the deployment for my website and whether or not each connection is secured with mTLS.

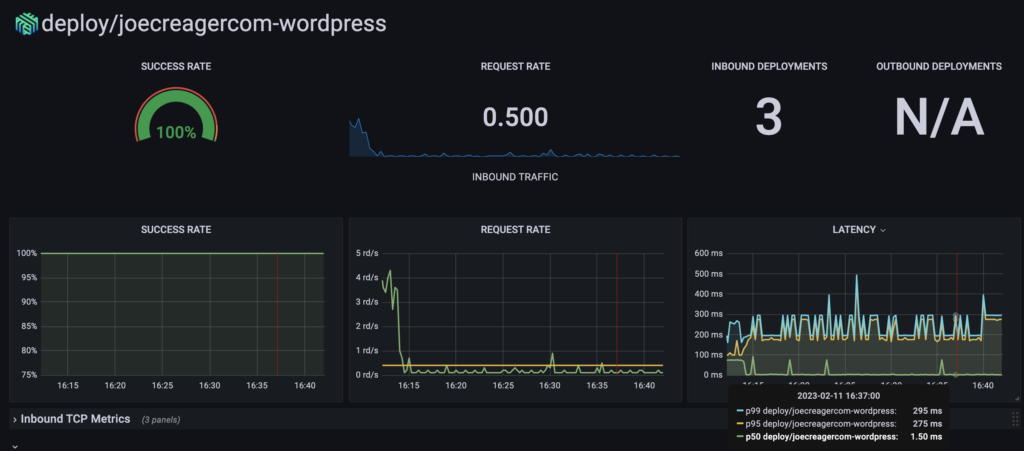

As satisfied as I was with my progress, I felt I was still not taking full advantage of what Linkerd can offer. Linkerd Viz ships with its own instance of Prometheus, and there is a collection of dashboards that leverage the data collected this way. You can find them here.

By 3:00PM I had the Linkerd Grafana dashboards set up. I was lazy and elected not to create IaC for these dashboards for now. Hey, it’s Saturday.

Was there a price to pay?

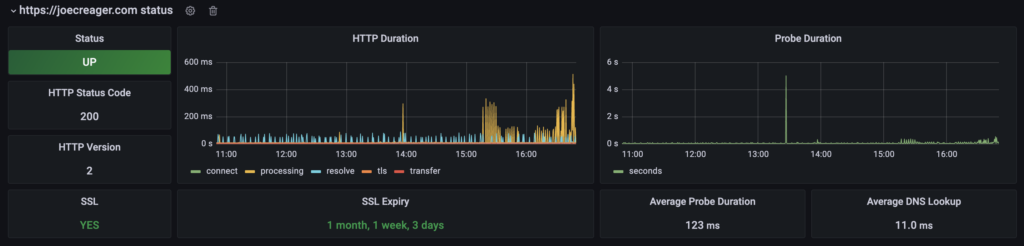

You might be wondering how all of this affected the latency of my site. I track the response time of my site with blackbox_exporter and was able to capture how this change affected the latency.

As you can see from Figure 4, there was a slight increase in latency after adding mTLS between the ingress controller and pod for my website. The very big spike in latency was when I restarted my ingress controller to add the Linkerd proxy. The most notable increase in latency is due to spikes in TLS latency during requests. I will continue to monitor this, but it seems very tolerable in the grand scheme of things.
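For reference, a probe like the one behind Figure 4 can be wired into Prometheus roughly as follows. This is a sketch rather than my exact configuration: the job name, the target URL, and the blackbox-exporter:9115 address are placeholders, and it assumes the exporter's stock http_2xx module.

```yaml
scrape_configs:
  - job_name: blackbox-http            # placeholder job name
    metrics_path: /probe
    params:
      module: [http_2xx]               # stock HTTP probe module
    static_configs:
      - targets:
          - https://example.com        # placeholder for the site being probed
    relabel_configs:
      # Pass the target URL to the exporter as the ?target= parameter
      - source_labels: [__address__]
        target_label: __param_target
      # Keep the probed URL as the instance label on the resulting series
      - source_labels: [__param_target]
        target_label: instance
      # Send the scrape itself to the exporter instead of the site
      - target_label: __address__
        replacement: blackbox-exporter:9115   # placeholder exporter address
```

With a job like this, probe_duration_seconds gives the total response time, and probe_http_duration_seconds split by its phase label (resolve, connect, tls, processing, transfer) shows how much of that time goes to the TLS handshake.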

Conclusion

Well, that was easy. However, I wouldn’t call my implementation production-ready. Here are a few things you will want to do if you decide to adopt this in a production setting.

- Pass the mTLS certs and key via a Kubernetes Secret rather than a raw file. Ideally this will be securely stored in a secrets vault of some form with some kind of rotation policy.

- Store the Grafana dashboards as ConfigMaps.

- Configure the Linkerd control plane for high availability.
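For the dashboards item, one common pattern is to let a Grafana sidecar load dashboards from labelled ConfigMaps. Below is a minimal sketch, assuming Grafana was installed from the community Helm chart with the dashboard sidecar enabled and its default grafana_dashboard label. The ConfigMap name, the namespace, and the stub dashboard JSON are all placeholders; the real JSON would be one of the exported Linkerd dashboards.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: linkerd-dashboard-example    # hypothetical name
  namespace: monitoring              # hypothetical namespace
  labels:
    grafana_dashboard: "1"           # label the sidecar is assumed to watch for
data:
  # Stub dashboard; replace with the exported dashboard JSON
  linkerd-example.json: |
    {
      "title": "Linkerd example (placeholder)",
      "panels": []
    }
```

Keeping each dashboard in its own ConfigMap means it can live in the same Terraform module or Helm release as the rest of the setup.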

Overall, I found Linkerd to be very easy to configure. I was able to set up mTLS between an ingress controller and pod on a Saturday afternoon. The extra monitoring dashboards are icing on the cake. If you are facing a similar compliance conundrum, give Linkerd a look.